The AI Monopoly Problem: What to Do

Why I'm Betting $8,000 on Decentralized Computing.

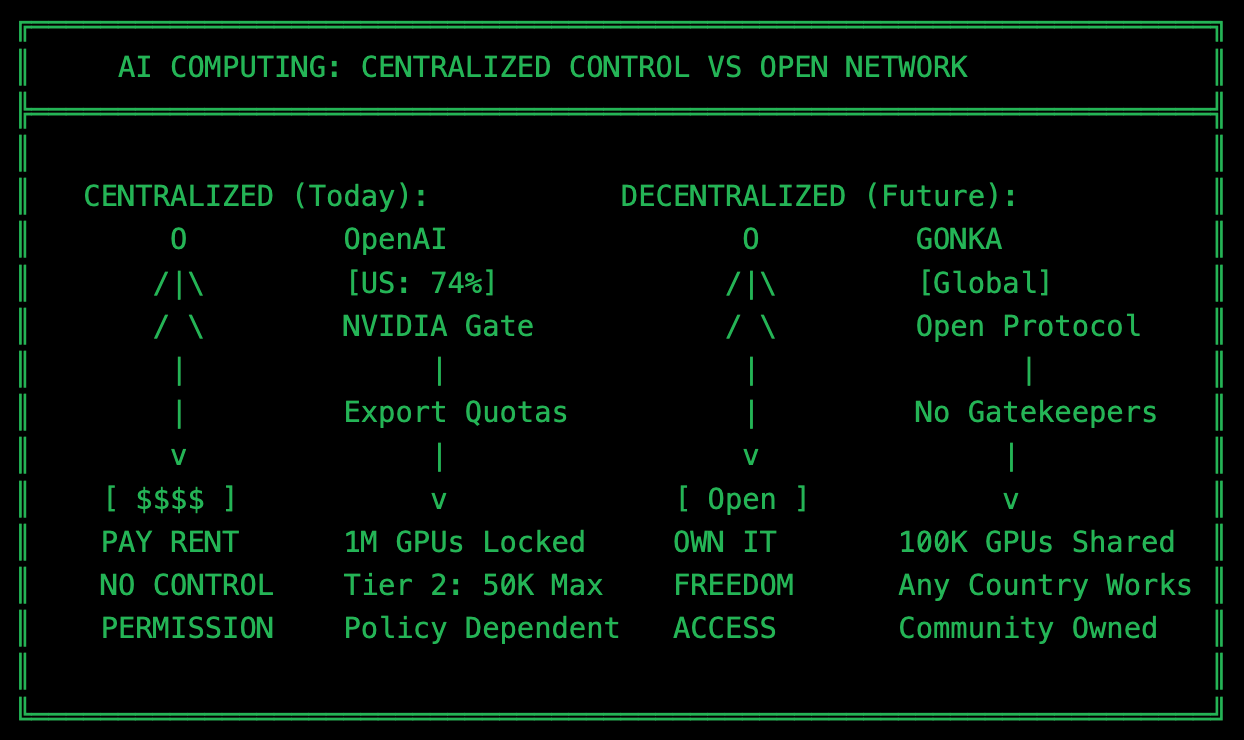

Three years ago, the US controlled 25% of global AI computing power. Today? 74%[1]. Meanwhile, countries like Kazakhstan, Vietnam, the UAE, and Bhutan sit on “thousands of GPUs” and face export quotas of 20,000 to 50,000 chips at most[2], while OpenAI alone runs roughly 1 million GPUs[3].

Sound familiar? We’ve seen this playbook before. Proprietary networks versus open protocols. AOL versus the Internet. Centralized control versus distributed infrastructure.

The question is: are we watching the birth of AI colonialism, or the beginning of something different?

The Monopoly Nobody’s Talking About

Biden’s January 2025 AI export rule (the “AI Diffusion” framework) doesn’t just restrict the most advanced models. It creates a global tiering system[4]. Tier 1 countries get unrestricted access. Tier 2 countries face caps of 49,901 H100-equivalent GPUs through 2027 (doubled to 99,802 if they “align” with US export goals). Tier 3 (China, Iran, Russia) gets nothing.

NVIDIA controls 90% of the AI chip market[5]. The US hosts 4,049 data centers versus China’s 379[6]. In 2024 alone, America added 5.8 gigawatts of data center capacity, while the EU added 1.6 GW and the UK 0.2 GW[7].

This isn’t a competitive advantage. This is architectural control.

What pisses me off isn’t the numbers. It’s the trajectory. Companies using OpenAI to build products are essentially training their future competitor — one they can never own, never audit, never truly control. Countries investing in AI development hit artificial ceilings not because of technology, but because of policy.

Why Decentralized Networks Aren’t Crazy

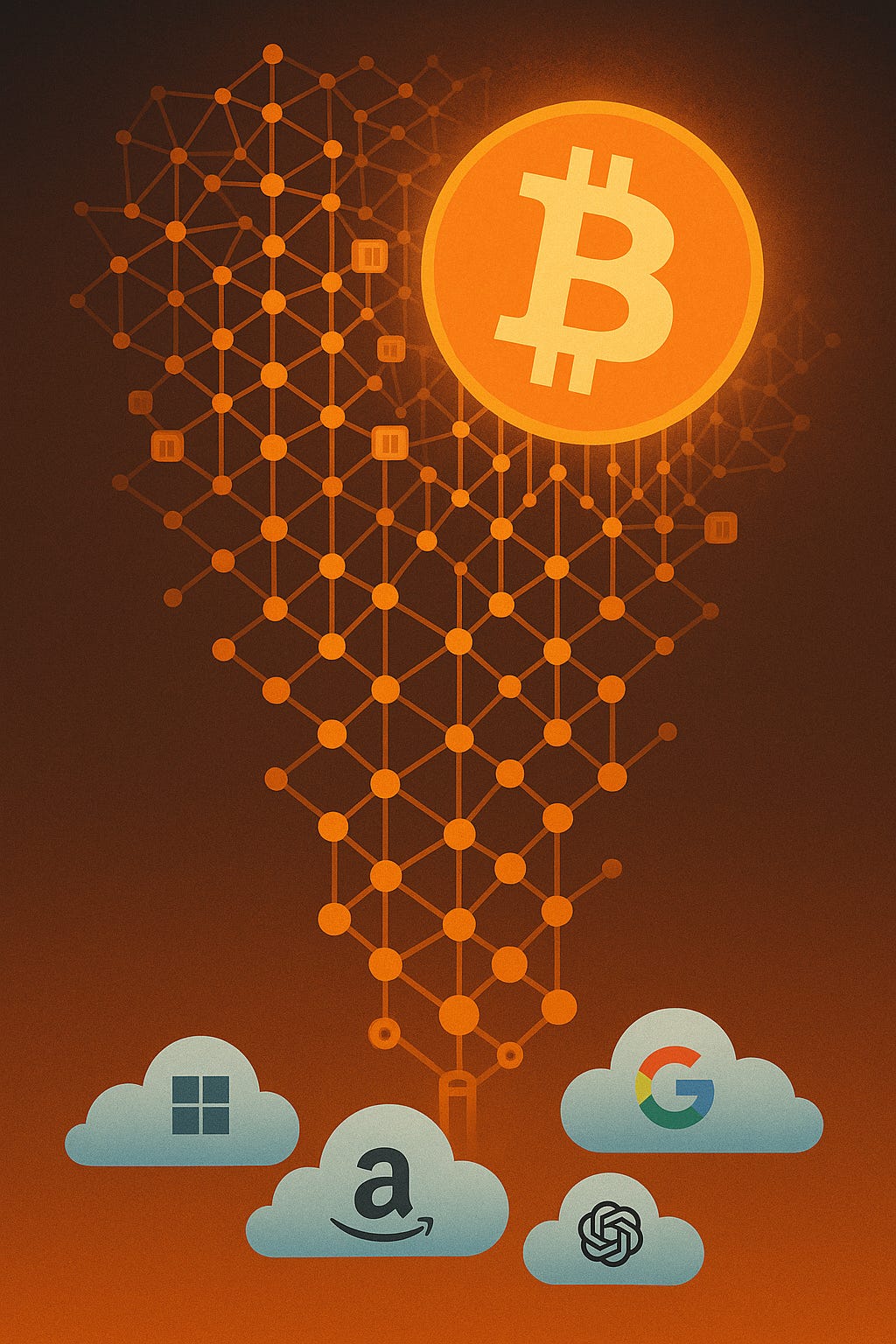

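Bitcoin taught us something critical: distributed networks scale. Today’s Bitcoin network runs at roughly 765 exahashes per second[8], which by raw operation count exceeds the computing power of Microsoft, Amazon, Google, and OpenAI combined, although those operations come from single-purpose SHA-256 chips, not general-purpose processors. Five to six million mining machines worldwide produce roughly 700 quintillion hashes every second[9] (estimates differ because the hashrate fluctuates daily).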

The problem? Essentially all of that power goes into solving cryptographic puzzles that are useless for anything except securing the network.
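To make “cryptographic puzzles” concrete, here is a toy proof-of-work loop in Python. It is a simplified sketch, not Bitcoin’s actual code: the header bytes and difficulty are made up, and real Bitcoin hashes an 80-byte block header and compares the result (read as a little-endian number) against a network-wide target. The shape of the work is the same: hash, check, bump the nonce, repeat.

```python
import hashlib


def double_sha256(data: bytes) -> bytes:
    """Bitcoin applies SHA-256 twice to block headers."""
    return hashlib.sha256(hashlib.sha256(data).digest()).digest()


def mine(header: bytes, difficulty_bits: int, max_nonce: int = 2**32) -> tuple[int, str]:
    """Toy proof-of-work: find a nonce whose double-SHA-256 hash, read here as a
    big-endian integer (a simplification), is below a target that has
    `difficulty_bits` leading zero bits."""
    target = 1 << (256 - difficulty_bits)
    for nonce in range(max_nonce):
        # Append the 4-byte nonce to the (made-up) header and hash it.
        digest = double_sha256(header + nonce.to_bytes(4, "little"))
        if int.from_bytes(digest, "big") < target:
            return nonce, digest.hex()
    raise RuntimeError("no valid nonce found in range")


if __name__ == "__main__":
    # 16 difficulty bits means about 65,536 attempts on average.
    nonce, digest = mine(b"toy block header", difficulty_bits=16)
    print(nonce, digest)
```

Each extra difficulty bit doubles the expected number of attempts, and the only thing the search produces is a nonce showing the work was spent. That is what makes the computation valuable for security and worthless for anything else.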

What if you could redirect that architecture toward useful AI computation?

Email showed us the same pattern. SMTP, first specified in 1981[10], became the decentralized protocol that outlasted every proprietary competitor. No single company controls it. No government can shut it down. It just works, across borders, platforms, and providers.

The internet itself followed this path. TCP/IP won because universities, the military, and tech companies could implement it freely while OSI committee members were “still holding meetings”[11].

Open protocols beat proprietary control when the value proposition is clear enough.

The Open Source Alternative

Here’s what people miss: the models already exist.

Qwen3 (Alibaba) outperforms DeepSeek-V3, Llama-4, and GPT-4o on code generation and complex reasoning in Alibaba’s published benchmarks[12]. DeepSeek-Coder-V2 supports 300+ programming languages with state-of-the-art performance[13]. In medical applications, DeepSeek models match GPT-4o and Gemini-2.0 on clinical decision-making tasks[14]. Llama 3.1 405B proves open source can scale to GPT-4 levels[15].

For specialized work: Qwen2.5 for research tasks, Llama 3.3 70B for clean prose, DeepSeek 67B for code-heavy projects. All open source. All runnable on hardware you can own (with quantization for the larger ones).

The barrier isn’t the models. It’s the infrastructure to run them at scale without corporate gatekeepers.

Why I’m Putting $8,000 on the Table

Next week (and I will document everything here), I’m launching a mining rig. Not for profit — though if the economics work out, great. I’m doing this because I’m tired of watching five corporations control who gets to build with AI.

The configuration, which will run 24/7 on the GONKA decentralized network:

2× Gigabyte RTX 4090 AORUS XTREME WATERFORCE GPUs (water-cooled for summer heat)

AMD Ryzen 9 7950X

128GB DDR5 RAM

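Total VRAM: 48GB (24GB per card). Enough to run quantized versions of the Qwen, DeepSeek, and Llama models listed above locally, up to the 70B class at 4-bit precision (Llama 3.1 405B is out of reach), while contributing compute power to a global network anyone can access.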
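The 48GB figure is easy to sanity-check with back-of-the-envelope arithmetic: weight memory is roughly parameter count times bits per weight divided by 8. This sketch assumes weights dominate and ignores KV cache, activations, and framework overhead, and it assumes the inference runtime can split a model across both cards, so treat “fits” as a lower bound on what you actually need.

```python
# Rough VRAM needed just to hold model weights: parameters x bits / 8.
# Assumption: ignores KV cache, activations, and runtime overhead,
# which add several GB on top of these numbers in practice.

RIG_VRAM_GB = 48  # 2 x RTX 4090 at 24 GB each


def weight_memory_gb(params_billion: float, bits_per_weight: int) -> float:
    """Approximate weight memory in GB (billions of params x bytes per param)."""
    return params_billion * bits_per_weight / 8


# Parameter counts (in billions) for the sized models named in the post.
models = {
    "Llama 3.3 70B": 70,
    "DeepSeek 67B": 67,
    "Llama 3.1 405B": 405,
}

for name, params in models.items():
    for bits in (16, 8, 4):
        need = weight_memory_gb(params, bits)
        verdict = "fits (weights only)" if need < RIG_VRAM_GB else "does not fit"
        print(f"{name:<15} {bits:>2}-bit: {need:6.1f} GB -> {verdict}")
```

At 4-bit, a 70B model needs about 35GB for weights alone, which leaves some headroom on two 24GB cards. At 8-bit it already needs 70GB, and Llama 3.1 405B needs about 200GB even at 4-bit.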

IMPORTANT! FIRST: I will run Qwen and DeepSeek models locally without asking anyone what to do or how to do it. SECOND: I will contribute processing power to the decentralized AI network.

GONKA launched a month ago with 80 GPUs. Today: 450 GPUs. Target for year one: 100,000 GPUs[16]. The project describes it as proof-of-work for AI: 99% of computing power goes to useful inference and only 1% to network security. Open source protocol. Community-controlled.
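The year-one target implies a steep curve. A quick sketch of the required growth rate, assuming “year one” means 12 months from launch (so 11 months remain after the 450-GPU snapshot) and steady compound growth, which real networks rarely follow:

```python
def required_monthly_growth(current: float, target: float, months: float) -> float:
    """Constant monthly rate r such that current * (1 + r) ** months == target."""
    return (target / current) ** (1 / months) - 1


# Figures from the text: 450 GPUs one month after launch, 100,000 targeted for year one.
# Assumption: 11 months remain in year one.
r = required_monthly_growth(450, 100_000, 11)
print(f"required growth: {r:.1%} per month")
```

That works out to roughly 63% growth every month for eleven straight months. For comparison, the first month took the network from 80 to 450 GPUs, about 5.6x.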

The economics? Break-even might take 8 months, or the project might never break even at all. I don’t care. This isn’t about ROI. It’s the IDEA I need to follow, and either way I end up with a powerful machine for LLMs (and for incredible gaming) :)
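Whether 8 months is realistic depends on two numbers nobody knows yet: what the network pays and what the electricity costs. Here is a hypothetical calculator in which every input is an assumption: about 1.2 kW at full load (two roughly 450 W GPUs plus CPU and platform), $0.15/kWh, and 730 hours per month of 24/7 operation. GONKA’s actual payouts are not modeled.

```python
HOURS_PER_MONTH = 730.0  # 24/7 operation in an average month (8,760 h / 12)


def monthly_power_cost(power_kw: float, price_per_kwh: float) -> float:
    """Electricity cost of running the rig around the clock for one month."""
    return power_kw * HOURS_PER_MONTH * price_per_kwh


def break_even_months(hardware_cost: float, monthly_revenue: float,
                      power_kw: float, price_per_kwh: float) -> float:
    """Months until net earnings cover the hardware; inf if the rig never profits."""
    net = monthly_revenue - monthly_power_cost(power_kw, price_per_kwh)
    if net <= 0:
        return float("inf")
    return hardware_cost / net


def required_monthly_revenue(hardware_cost: float, target_months: float,
                             power_kw: float, price_per_kwh: float) -> float:
    """Monthly rewards needed to pay off the hardware within target_months."""
    return hardware_cost / target_months + monthly_power_cost(power_kw, price_per_kwh)


# Hypothetical inputs, not measurements.
POWER_KW = 1.2
PRICE_PER_KWH = 0.15

needed = required_monthly_revenue(8000, 8, POWER_KW, PRICE_PER_KWH)
print(f"power cost: ${monthly_power_cost(POWER_KW, PRICE_PER_KWH):.2f}/month")
print(f"needed for 8-month break-even: ${needed:.2f}/month")
print(f"check: {break_even_months(8000, needed, POWER_KW, PRICE_PER_KWH):.1f} months")
```

Under those assumptions electricity costs about $131 a month, so an 8-month break-even on $8,000 would need roughly $1,131 a month in rewards.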

This is about voting with resources for a world where a Vietnamese startup, a Ukrainian developer, or a Moroccan student can access AI infrastructure without permission from Silicon Valley or Washington.

The Bottom Line

We’re at a fork in the road. One path leads to AI as a controlled utility — five companies, one government, gatekeeping access to the most important technology of our generation.

The other path looks like email, like the internet, like Linux. Messy, distributed, imperfect — but belonging to everyone.

Decentralized networks for AI won’t replace cloud providers overnight. They don’t need to. They just need to prove that open infrastructure can work at scale.

That’s worth $8,000 of hardware. That’s worth the electricity costs, the setup time, and the uncertainty.

Because the alternative — where every country’s AI development requires export approval, where every business builds on rented infrastructure they’ll never control — that’s not a future I want to see.

What’s your take? Are we headed toward AI colonialism, or can decentralized infrastructure actually compete with centralized control?

[7] Ibid. In 2024, the US added 5.8 GW of data center capacity vs. 1.6 GW for the EU and 0.2 GW for the UK.

[16] Internal analysis based on GONKA network interview transcripts (November 2025). The network launched one month before the interview with 80 GPUs, had reached 450 GPUs at the time of the interview, and targets 100,000 GPUs in year one.
